Why you can ignore a leaking tap in your apartment but not a mosquito

The rich information offered by multiple senses typically benefits our information processing and behaviour in everyday situations. In social situations where many people are speaking, seeing lip movements helps us understand what will be said next. Evidence that sounds improve the perception of visual objects is found even in the laboratory, where stimuli are necessarily simplified. However, we still don’t understand how “automatic” these influences are, that is, how independent they are of what we’re doing and of the circumstances in which the sounds appear (the context).

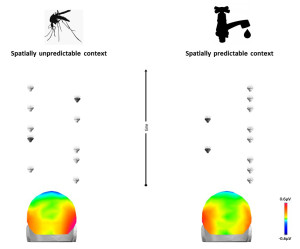

Fig. 1. A schematic representation of the experimental setup. In spatially unpredictable contexts, participants passively listened to sounds presented with equal probability on the left and right side of space (rare sounds differed from the others in frequency; they are the darker-coloured items in the display). In spatially predictable contexts, participants passively listened to sounds presented on the majority of trials on one side of space (rare sounds now differed in the side of space on which they appeared). Brain activity (voltage measured on the scalp) was enhanced over the occipital cortex in the context where sounds were spatially unpredictable, but not where they were predictable.

One widely investigated mechanism whereby sounds affect vision is the movement of our attention in space. Early theories of attention argued that these movements are automatic inasmuch as an object that suddenly appears outside our current focus can attract our attention to its location, even when the object is unimportant to what we’re currently doing. Studies using a variety of methods jointly suggest that, in typically visual tasks, what determines whether such objects capture attention is whether or not they are visual. Visual objects need to be at least partly relevant to what we’re currently doing, whereas sounds seemingly capture our attention irrespective of our goals.

Recently, electroencephalography (EEG) helped to reveal a purported “brain signature” of the capture of attention across the senses: enhanced brain activity over occipital, nominally visual portions of the cortex (on the side opposite to that of the sounds). EEG is a non-invasive method of recording electrical brain activity by placing sensors (electrodes) along the scalp. In past studies, sounds were consistently found to activate occipital cortices even when they were not directly important to what participants were doing, leading some to argue that these activations, as well as the mechanism controlling attention movements, are “automatic”.

However, in virtually all those past studies, the location where the sounds would appear next was unpredictable. Neuroscience is increasingly recognising that our brain is more of a proactive agent than a passive receiver: to reduce the ambiguity involved in recognising objects in everyday, noisy situations, the brain involuntarily tracks regularities in stimulus streams and creates predictions about upcoming sensory events. To test the importance of predictions (that is, the context) for the capability of sounds to activate the occipital cortex, we recorded EEG from ten participants while they watched a muted, subtitled movie and listened to unimportant sounds. Across different blocks of experimental trials, these sounds differed in whether their location (in the left or right space) was regular and therefore predictable (Fig. 1). We found that sounds activated the occipital cortex, but only in blocks where the location of the sounds was not predictable.

Our findings explain why you can ignore a leaky tap in your apartment but not a pesky mosquito: a mosquito’s trajectory is unpredictable. Together with other studies, these results portray predictions as a “double-edged sword” for the fate of events falling outside of our goals. Events that actually help us in what we’re currently doing are continuously processed and utilised; others are suppressed by the brain, thanks to their predictability. The current findings have direct applications to real-life situations (which typically contain stimulation across different senses). For one, they provide much-needed evidence that automated alerts (“A meeting with Mr. X in 15 minutes!”) and in-car warning signals should be designed with sounds or touch/vibrations to effectively aid our – predominantly visual – functioning. More generally, when we need to focus but the distraction is unpredictable, for example when other students are loud next to us in the library, the best idea might be to find another seat rather than spend energy trying to ignore them.

Pawel J. Matusz1,2, Chrysa Retsa1, Micah M. Murray1,3,4

1The Laboratory for Investigative Neurophysiology (The LINE), Neuropsychology and Neurorehabilitation Service and Department of Radiology, University Hospital Center and University of Lausanne, Lausanne, Switzerland

2Attention, Brain, and Cognitive Development Group, Department of Experimental Psychology, University of Oxford, UK

3EEG Brain Mapping Core, Center for Biomedical Imaging (CIBM) of Lausanne and Geneva, Lausanne, Switzerland

4Department of Ophthalmology, University of Lausanne, Jules-Gonin Eye Hospital, Lausanne, Switzerland

Publication

The context-contingent nature of cross-modal activations of the visual cortex.

Matusz PJ, Retsa C, Murray MM

Neuroimage. 2016 Jan 15
